Elevating data security: Ingest data from an Azure Event Hub secured by Entra ID

If you’re just here for the practical example, skip ahead.

Are you still relying on static connection strings or shared access signature (SAS) keys to protect your sensitive data streams in Azure Event Hubs? While convenient, these methods can introduce security vulnerabilities. This blog demonstrates a more secure and modern approach.

Why Entra ID and OAuth are essential for a secure pipeline

Azure Event Hubs offers a scalable, resilient platform for real-time data streaming, suited to workloads such as collecting telemetry and sensitive log data. Robust authentication for data ingestion is a necessity, not just a best practice, particularly when the data is sensitive and access must be centrally managed and secured.

We will guide you through the process of configuring your Logstash Kafka input to authenticate directly against Microsoft Entra ID (formerly known as Azure Active Directory or Azure AD) using the OAuth 2.0 protocol. By using the SASL/OAUTHBEARER mechanism, you can move beyond traditional, less flexible authentication methods, such as SASL/PLAIN with hardcoded credentials. Instead, you can embrace a more secure, centralized, and manageable approach to authentication with Entra ID.

Why move to Entra ID and OAuth?

Centralized identity management: Align with Microsoft's best practices by managing access through Entra ID, the central authority for identity and access management.

Enhanced security: Utilize expiring tokens instead of long-lived static credentials, significantly reducing your attack surface.

Simplified auditing: Streamline your security audits with centralized and consistent access policies.

Modern authentication: Adopt the OAuth 2.0 protocol, the industry standard for secure, delegated access.

Before versions 8.18 and 9.0, both Logstash and Elastic Agent relied on SAS keys to consume data from Azure Event Hubs. While SAS is a convenient connection method, Entra ID centralizes identity and access management for authentication and authorization, aligning with Microsoft's best practices.

From the 8.18/9.0 release, Logstash's Kafka input has been updated to support OAuth 2.0. This update allows Logstash to connect to Azure Event Hubs via Entra ID by utilizing the Azure Event Hubs Kafka endpoint.

The benefits are clear. Now, let's review what you'll need before starting the configuration.

Before you begin: Your preflight checklist

Logstash: Version 8.18+ or 9.0+ deployed

Azure Event Hub: Standard tier or higher, with the Apache Kafka Endpoint enabled

Microsoft Entra ID: A P1 or P2 plan

Permissions: Sufficient privileges in Azure to create a new App Registration

Azure configuration: A 3-step guide

An application registration in Entra ID is necessary to obtain the required credentials. Then we utilize the Java Authentication and Authorization Service (JAAS) in Logstash to authenticate. Get ready to fortify your data pipelines and streamline your authentication workflow.

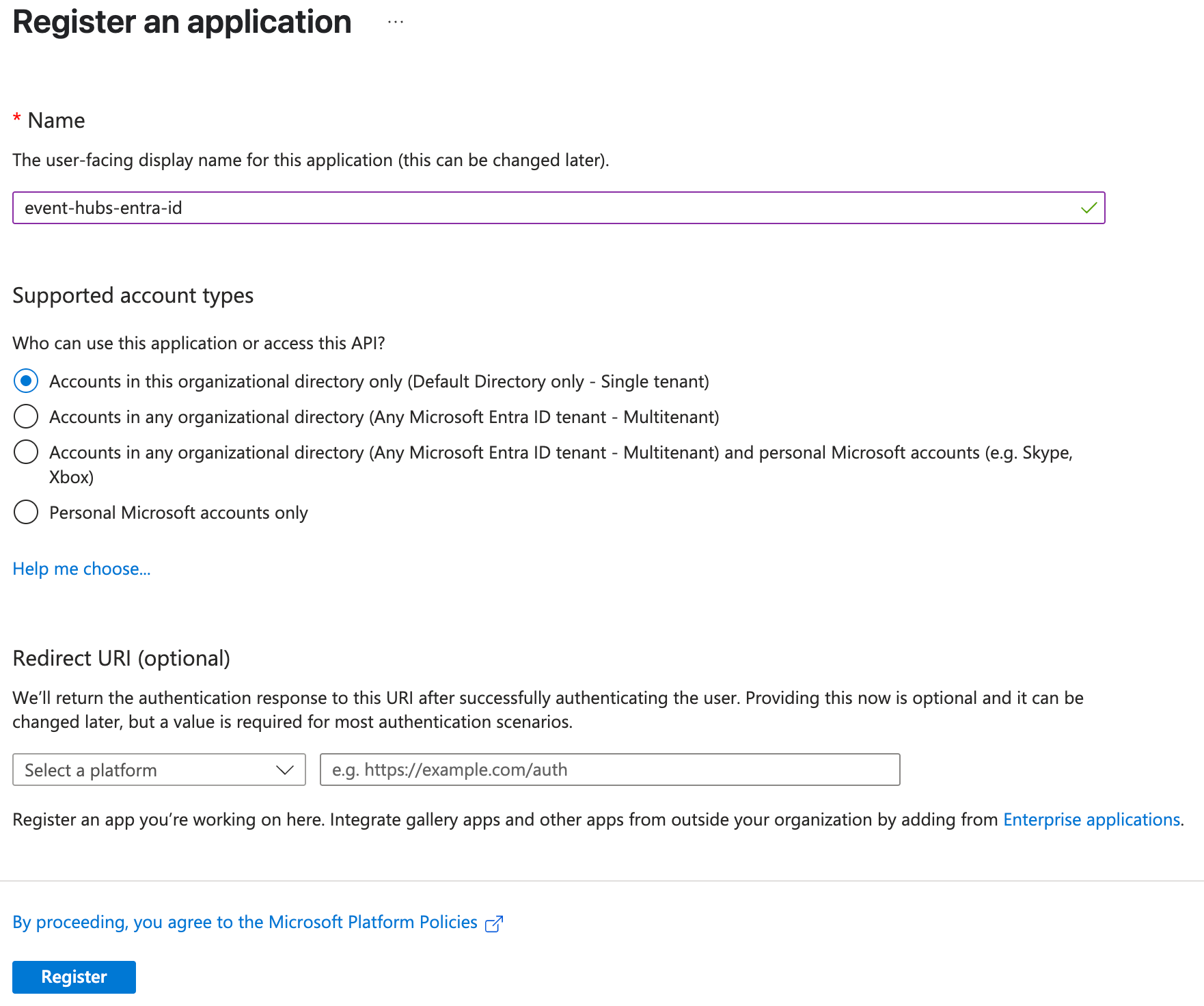

Step 1: Registering the application in Azure

Navigate to the Azure Portal and search for “App Registrations.” On the App Registrations page, add a New Registration. Provide a name, and modify the supported account types if you wish. The Redirect URI can be left empty. Then click Register.

Step 2: Generate and store a Client Secret

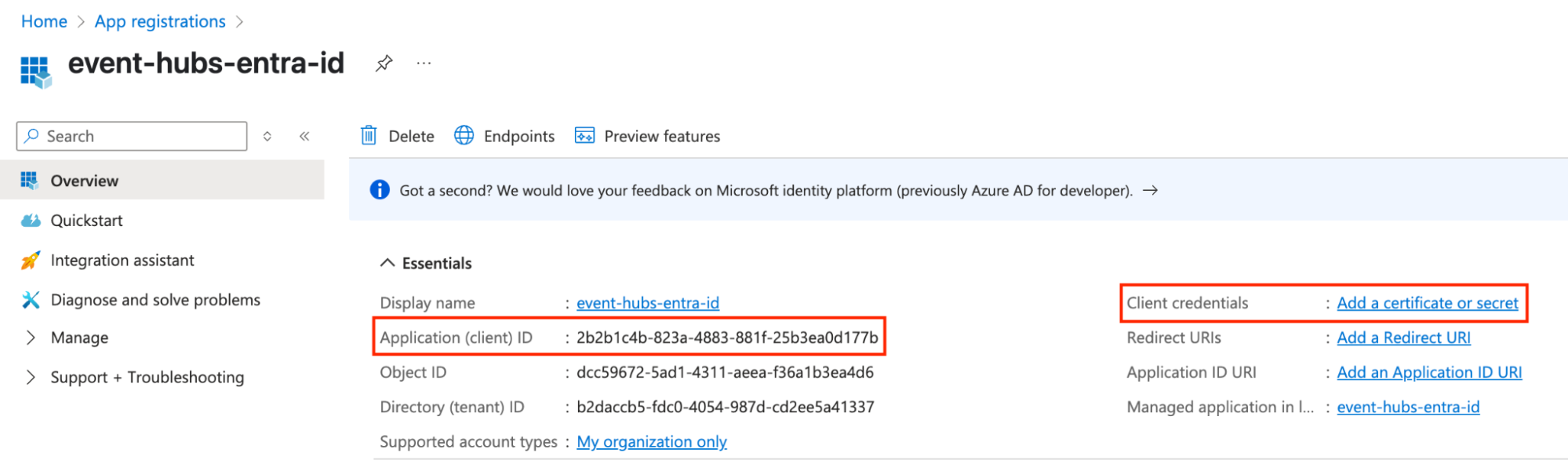

After registering your application, you will be redirected to the details page for your app registration. Here you can find the Application (client) ID and also generate a client credential. Click Add a certificate or secret.

Add a New Client Secret, provide a name, and set an appropriate expiry. Take note of the Secret Value as it will not appear again.

Step 3: Assign the Azure Event Hubs Data Receiver role

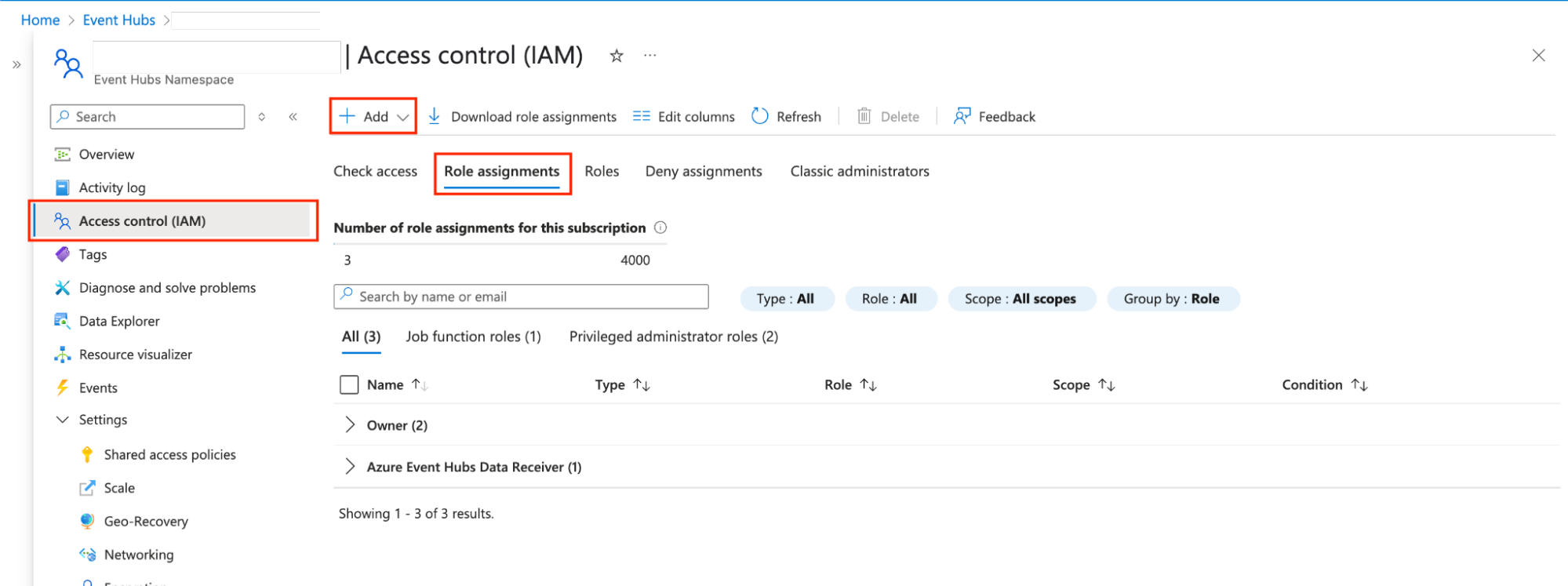

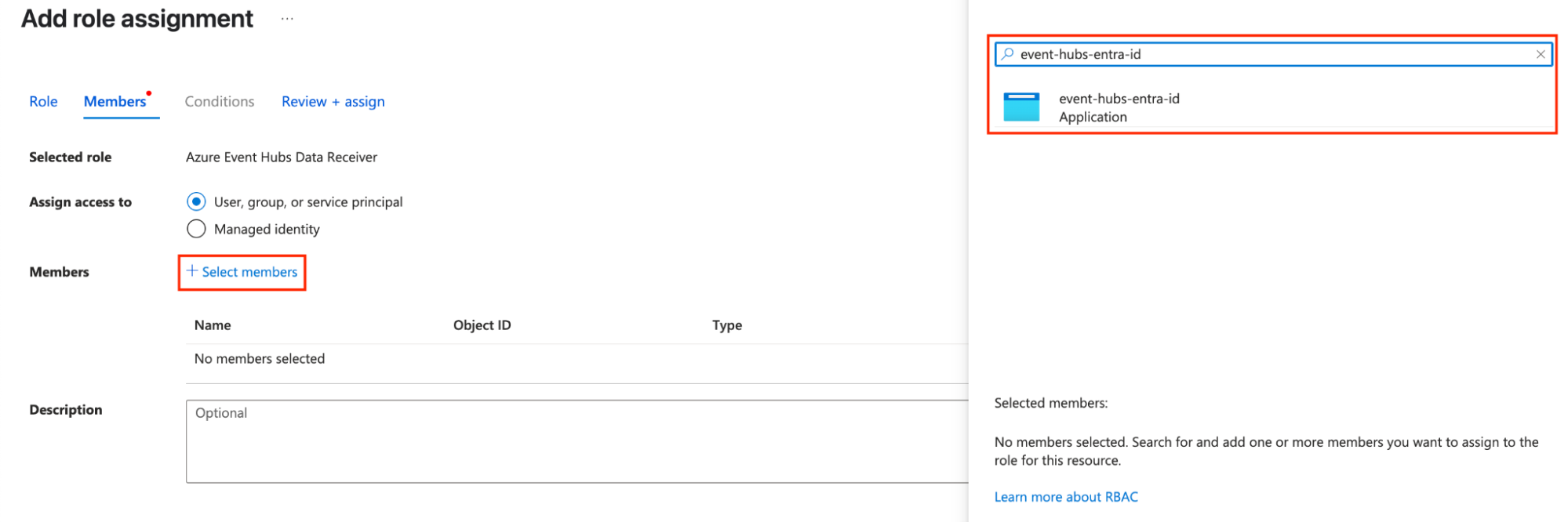

Now that you have an application registered to Entra ID and have the credentials, the last step is to allow this application to read from Event Hubs. Navigate to the Event Hub you wish to read from and click Access Control (IAM) > Role Assignments > Add.

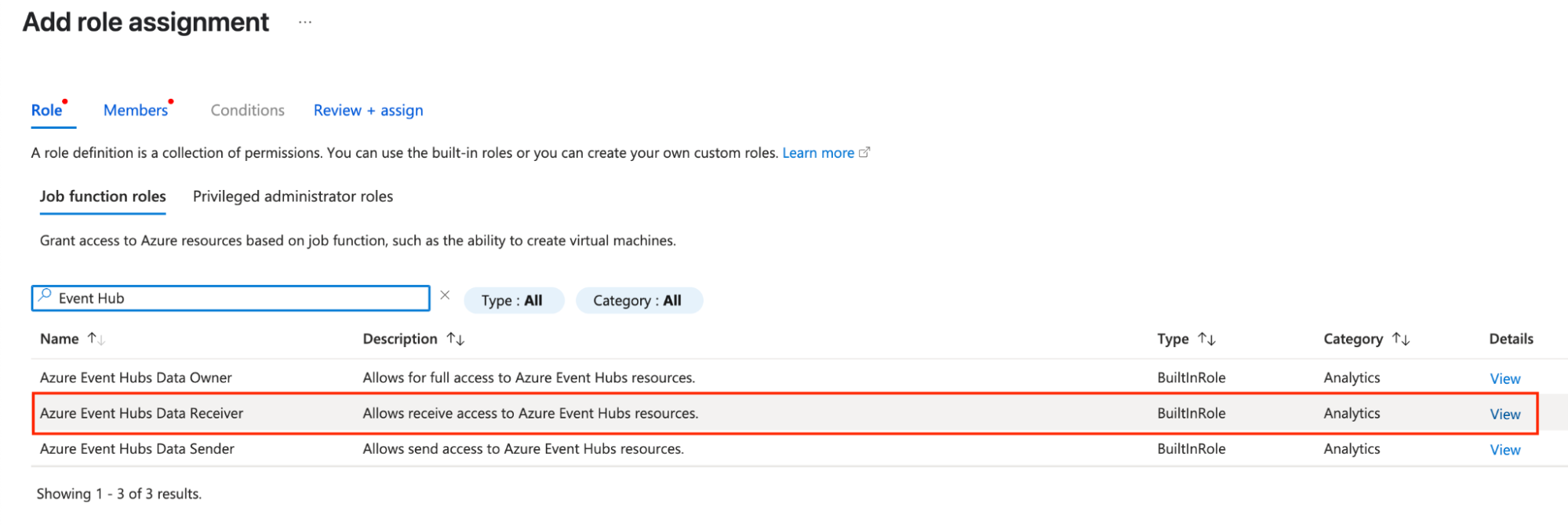

On the Add Role Assignment page, start on the Job function roles tab. Search for “Event Hub” and select Azure Event Hubs Data Receiver.

Then click the Members tab, select User, group or service principal, and click Select members. Search for the application name you created in Step 1.

Click Review and Assign to complete the role assignment.

Now you are ready to configure Logstash to read from Event Hubs using Entra ID.
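Before moving on, it can help to confirm that the app registration and client secret work on their own. The following is a minimal Python sketch, not part of the original setup, of an OAuth 2.0 client-credentials request against the Entra ID token endpoint that appears later in the Logstash configuration. The function names and arguments are illustrative assumptions, and the request shape only approximates what the Kafka client sends on Logstash's behalf.

```python
import json
import urllib.parse
import urllib.request


def build_token_request(tenant_id, client_id, client_secret, namespace):
    """Return (url, form_body) for a client-credentials token request.

    The scope follows the pattern used in the Logstash configuration:
    the Event Hubs namespace URL followed by /.default.
    """
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": f"https://{namespace}.servicebus.windows.net/.default",
    }
    return url, urllib.parse.urlencode(form).encode("ascii")


def fetch_token(tenant_id, client_id, client_secret, namespace):
    """Send the request and return the decoded JSON response.

    Requires network access and valid credentials; raises
    urllib.error.HTTPError if Entra ID rejects the request.
    """
    url, body = build_token_request(tenant_id, client_id, client_secret, namespace)
    request = urllib.request.Request(url, data=body, method="POST")
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Calling fetch_token with your tenant ID, the Application (client) ID, the Secret Value, and the namespace name should return a JSON document containing an access_token field if the credentials are valid. Read the secret from an environment variable or a secrets manager rather than writing it into a script.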

Logstash configuration

Step 1: Choosing the right Logstash input: Why the Kafka input wins for OAuth

The azure_event_hubs-input plugin is specifically designed for Azure Event Hubs. However, its primary authentication mechanisms revolve around connection strings, which often embed SAS keys.

At the standard tier and above, Azure Event Hubs provides an Apache Kafka-compatible endpoint. This compatibility means that standard Kafka client applications, such as Logstash's kafka-input plugin, can directly connect to Event Hubs. As of 8.18/9.0, the kafka-input is capable of utilizing the OAUTHBEARER authentication mechanism, which is used for Entra ID. This native support within the Kafka plugin makes it the ideal choice when authenticating via Entra ID is required.

Step 2: Configuring Logstash Kafka input with Entra ID OAuth

The following configuration snippet provides an example of a Kafka input configuration for OAuth.

Important: Always secure sensitive data using the Logstash keystore.

    input {
      kafka {
        ## The FQDN of your Event Hubs namespace, using the Kafka port
        bootstrap_servers => "${EVENT_HUB_NAMESPACE}.servicebus.windows.net:9093"
        topics => ["my_topic"]
        group_id => "$Default"
        codec => "json" ## Assumes incoming data is in JSON format

        ## --- OAuth Configuration ---
        security_protocol => "SASL_SSL" ## Enforces encryption and SASL authentication
        sasl_mechanism => "OAUTHBEARER" ## Specifies OAuth 2.0 as the mechanism
        sasl_oauthbearer_token_endpoint_url => "https://login.microsoftonline.com/${TENANT_ID}/oauth2/v2.0/token"
        sasl_login_callback_handler_class => "org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler"

        ## JAAS config with credentials for the Entra ID token endpoint
        ## IMPORTANT: Use Logstash Keystore for CLIENT_ID and SECRET_VALUE
        sasl_jaas_config => 'org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required clientId="${CLIENT_ID}" clientSecret="${SECRET_VALUE}" scope="https://${EVENT_HUB_NAMESPACE}.servicebus.windows.net/.default";'
      }
    }

    filter {
    }

    output {
      elasticsearch {
        ${YOUR_CLUSTER_DETAILS}
      }
    }

The following table provides a quick reference for the key Kafka input parameters relevant to this configuration:

| Parameter | Example value/format | Explanation |
| --- | --- | --- |
| bootstrap_servers | "${EVENT_HUB_NAMESPACE}.servicebus.windows.net:9093" | Address of the Event Hubs Kafka endpoint |
| topics | ["my_topic"] | An array of Event Hub (topic) names to consume from |
| group_id | "$Default" | Kafka consumer group ID for this Logstash consumer (for example, a dedicated group such as "your_logstash_consumer_group") |
| security_protocol | "SASL_SSL" | Specifies encrypted communication with SASL authentication |
| sasl_mechanism | "OAUTHBEARER" | Specifies the OAuth 2.0 SASL mechanism |
| sasl_oauthbearer_token_endpoint_url | "https://login.microsoftonline.com/${TENANT_ID}/oauth2/v2.0/token" | Entra ID token endpoint |
| sasl_login_callback_handler_class | "org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler" | Kafka callback handler class that requests tokens from the token endpoint |
| sasl_jaas_config | 'org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required...;' | JAAS configuration string for OAuth (its options are described in the rows below) |
| clientId | "${CLIENT_ID}" | (Within sasl_jaas_config) Application (client) ID from the Entra ID app registration in Azure Configuration Step 1, stored in logstash-keystore |
| clientSecret | "${SECRET_VALUE}" | (Within sasl_jaas_config) Reference to the client secret generated in Azure Configuration Step 2, stored in logstash-keystore |
| scope | "https://${EVENT_HUB_NAMESPACE}.servicebus.windows.net/.default" | (Within sasl_jaas_config) Scope required for accessing Event Hubs |
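To make the structure of the sasl_jaas_config value easier to see, here is a small Python sketch that assembles the string from its parts: the login module class, the required control flag, the key="value" options, and the terminating semicolon. The helper name build_jaas_config is hypothetical; Logstash itself only needs the final string.

```python
LOGIN_MODULE = "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule"


def build_jaas_config(client_id, client_secret, namespace):
    """Assemble a JAAS configuration string for the OAUTHBEARER mechanism."""
    options = {
        "clientId": client_id,
        "clientSecret": client_secret,
        "scope": f"https://{namespace}.servicebus.windows.net/.default",
    }
    for key, value in options.items():
        # Values are wrapped in double quotes, so an embedded quote would
        # break the string. This sketch rejects it instead of escaping it.
        if '"' in value:
            raise ValueError(f"{key} must not contain a double quote")
    rendered = " ".join(f'{key}="{value}"' for key, value in options.items())
    return f"{LOGIN_MODULE} required {rendered};"


# Passing the keystore placeholders reproduces the value used in the config.
print(build_jaas_config("${CLIENT_ID}", "${SECRET_VALUE}", "${EVENT_HUB_NAMESPACE}"))
```

Keeping the ${...} placeholders in the string, rather than real values, is what lets Logstash resolve them from the keystore at startup.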

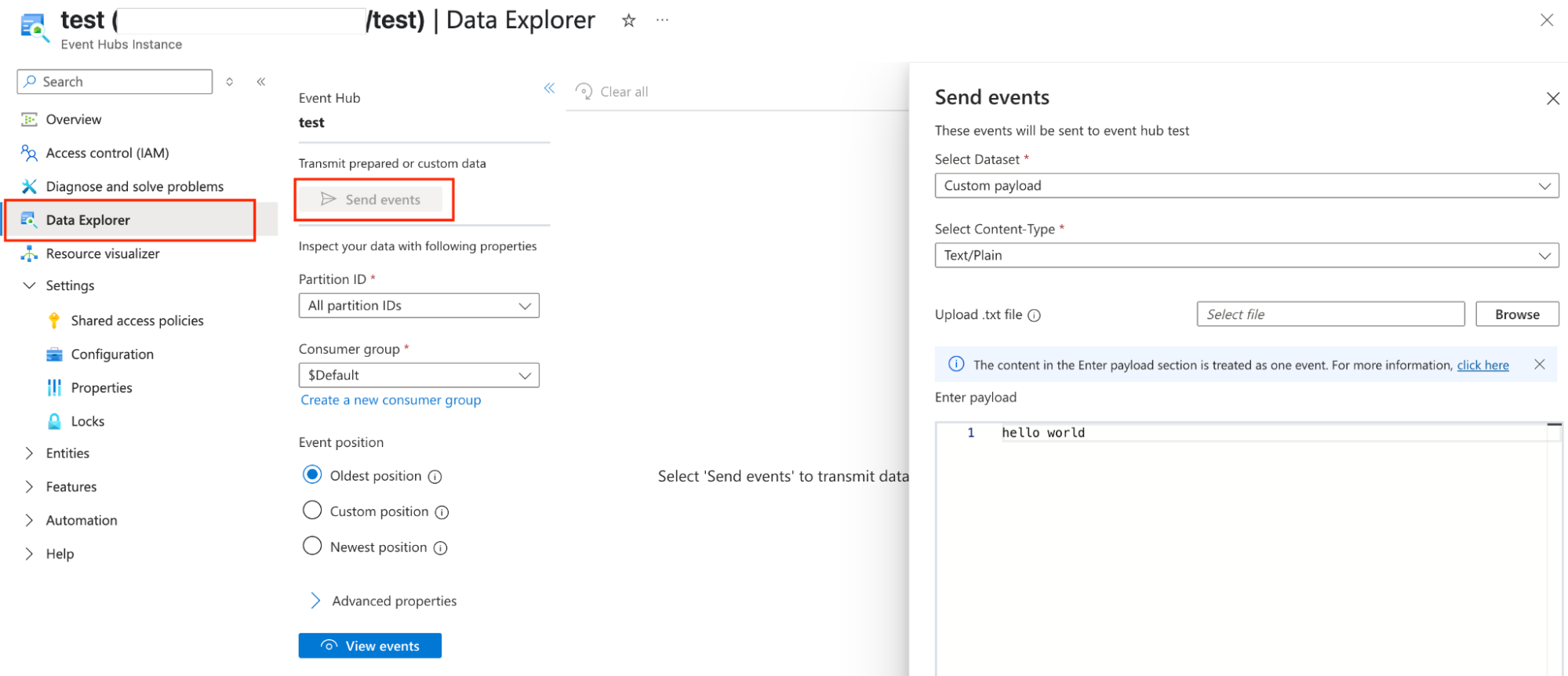

Testing and verification

Now, all we need to do is test the configuration. A fast way to test if your configuration is working is to use Event Hubs Data Explorer. This allows you to write events directly into the Event Hub for testing purposes.
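If you need a payload to paste into Data Explorer, a short script can generate one. The sketch below is only an assumption about what a convenient test event might look like; the message and sent_at field names are made up for illustration. Any valid JSON object should be decoded by the json codec used in the Kafka input.

```python
import json
from datetime import datetime, timezone


def make_test_event(message, now=None):
    """Return a JSON string with a message and a UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    return json.dumps({"message": message, "sent_at": now.isoformat()})


print(make_test_event("hello from Data Explorer"))
```

After sending the event, the same message and sent_at fields should appear on the resulting document in whichever output you configured.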

A practical use case

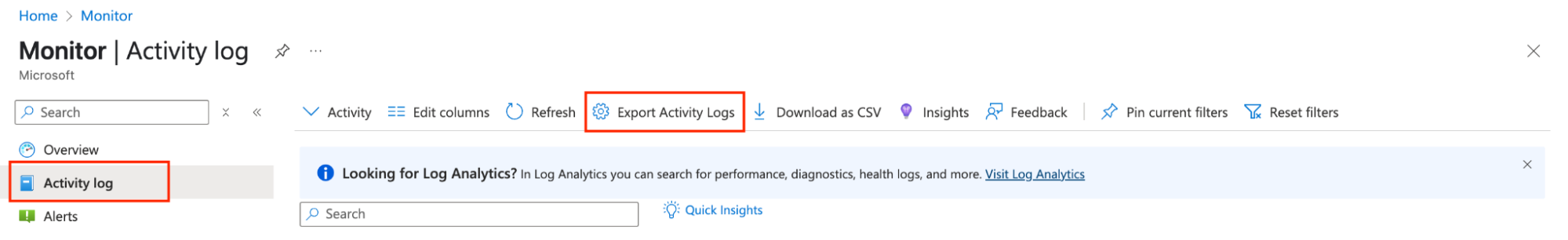

To see this secure pipeline in action, let's configure it for a common and critical use case: streaming Azure Activity Logs into Elastic. This enables robust security analysis and monitoring, all of which is protected by Entra ID authentication. In the Azure portal, search for Monitor, then navigate to Activity Log and Export Activity Logs.

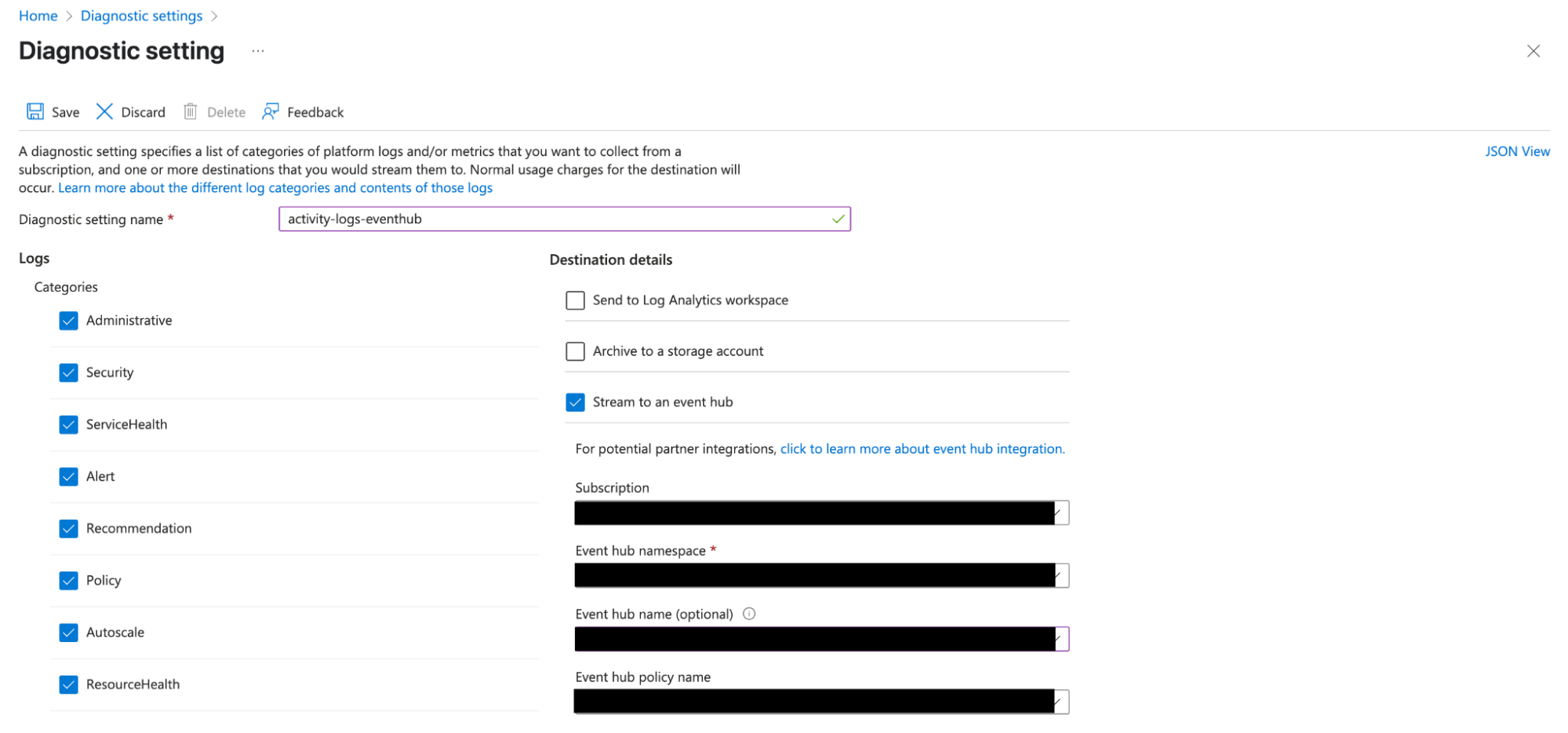

Next, configure a Diagnostic Setting to send the log categories to the Event Hub. In the Event Hub configuration section, select the Event Hub namespace configured in Azure Configuration Step 3. Additionally, provide the Event Hub name and an Event Hub policy name (SAS) with write permissions to the Event Hub.

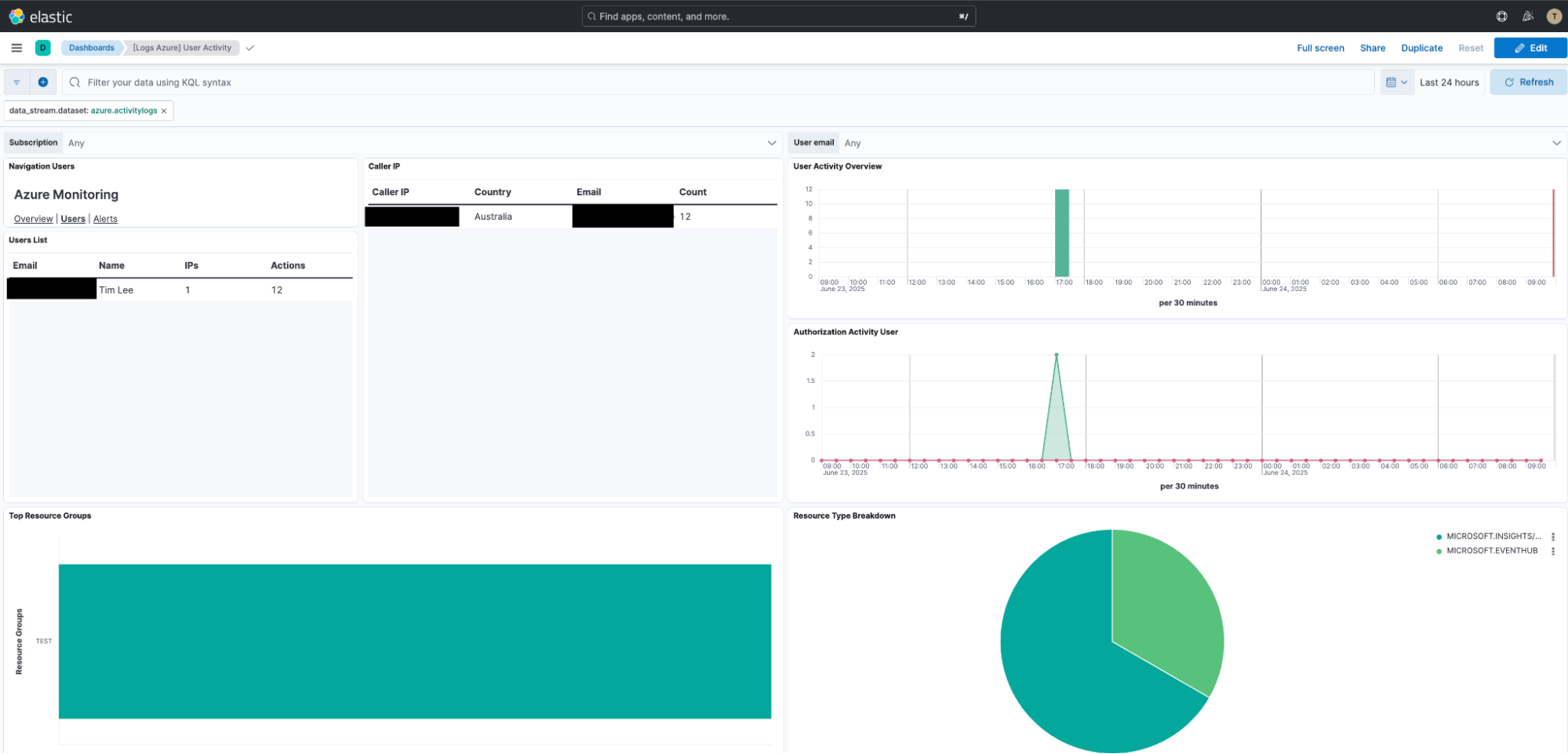

Once the diagnostic setting has been created, data should start appearing in the Event Hub within a few minutes. Elastic provides an Azure integration that includes ingest pipelines to parse the data and dashboards to help users with analysis.

Data from Azure Monitor is often sent to Event Hub as a JSON array containing multiple log records. To process this correctly in Elasticsearch, we need to perform two key transformations in the Logstash filter:

Split the Batch: The split filter separates the batched records array into individual events.

Restructure for Ingest: The ruby filter then ensures each individual event is formatted as a JSON string under event.original, which is the format the Elastic Azure integration's ingest pipeline expects. This allows for automatic parsing and enrichment.
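The effect of these two transformations can be sketched outside Logstash. The Python function below is a simplified model, not the filters themselves: it takes one decoded Event Hub message, emits one event per entry in records, and stores each record as a JSON string under event.original. The sample field names are illustrative placeholders rather than a real Activity Log schema.

```python
import json


def split_and_restructure(message):
    """Model the split, ruby, and mutate steps for one decoded message."""
    events = []
    for record in message.get("records", []):
        # split: every record becomes its own event, keeping any other
        # top-level fields from the original message.
        event = {key: value for key, value in message.items() if key != "records"}
        # ruby: serialize the record so the ingest pipeline can parse it.
        event["event"] = {"original": json.dumps(record)}
        # mutate: the records field is simply not copied onto the new event.
        events.append(event)
    return events


sample = {
    "records": [
        {"operationName": "example/write", "resultType": "Success"},
        {"operationName": "example/delete", "resultType": "Failure"},
    ]
}
for event in split_and_restructure(sample):
    print(event["event"]["original"])
```

The Logstash pipeline that performs these steps follows.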

input {

kafka {

bootstrap_servers => "${EVENT_HUB_NAMESPACE}.servicebus.windows.net:9093"

topics => ["my_topic"]

group_id => "\$Default"

codec => "json"

security_protocol => "SASL_SSL"

sasl_mechanism => "OAUTHBEARER"

sasl_oauthbearer_token_endpoint_url => "https://login.microsoftonline.com/${TENANT_ID}/oauth2/v2.0/token"

sasl_login_callback_handler_class => "org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler"

sasl_jaas_config => 'org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required clientId="${CLIENT_ID}" clientSecret="${SECRET_VALUE}" scope="https://${EVENT_HUB_NAMESPACE}.servicebus.windows.net/.default";'

}

}

filter {

## Split the Batched JSON into individual documents

split {

field => "records"

}

## Small Ruby script to json stringify the document so the ingest pipeline can process it

ruby {

code => "

hash = event.get('[records]').to_hash

string = hash.to_json

event.set('[event][original]',string)

"

}

## Remove the duplicate fields

mutate {

remove_field => ["records"]

}

}

output {

elasticsearch {

${YOUR_CLUSTER_DETAILS}

data_stream_type => "logs"

data_stream_dataset => "azure.activitylogs"

data_stream_namespace => "entraid" ## you can change this to any value

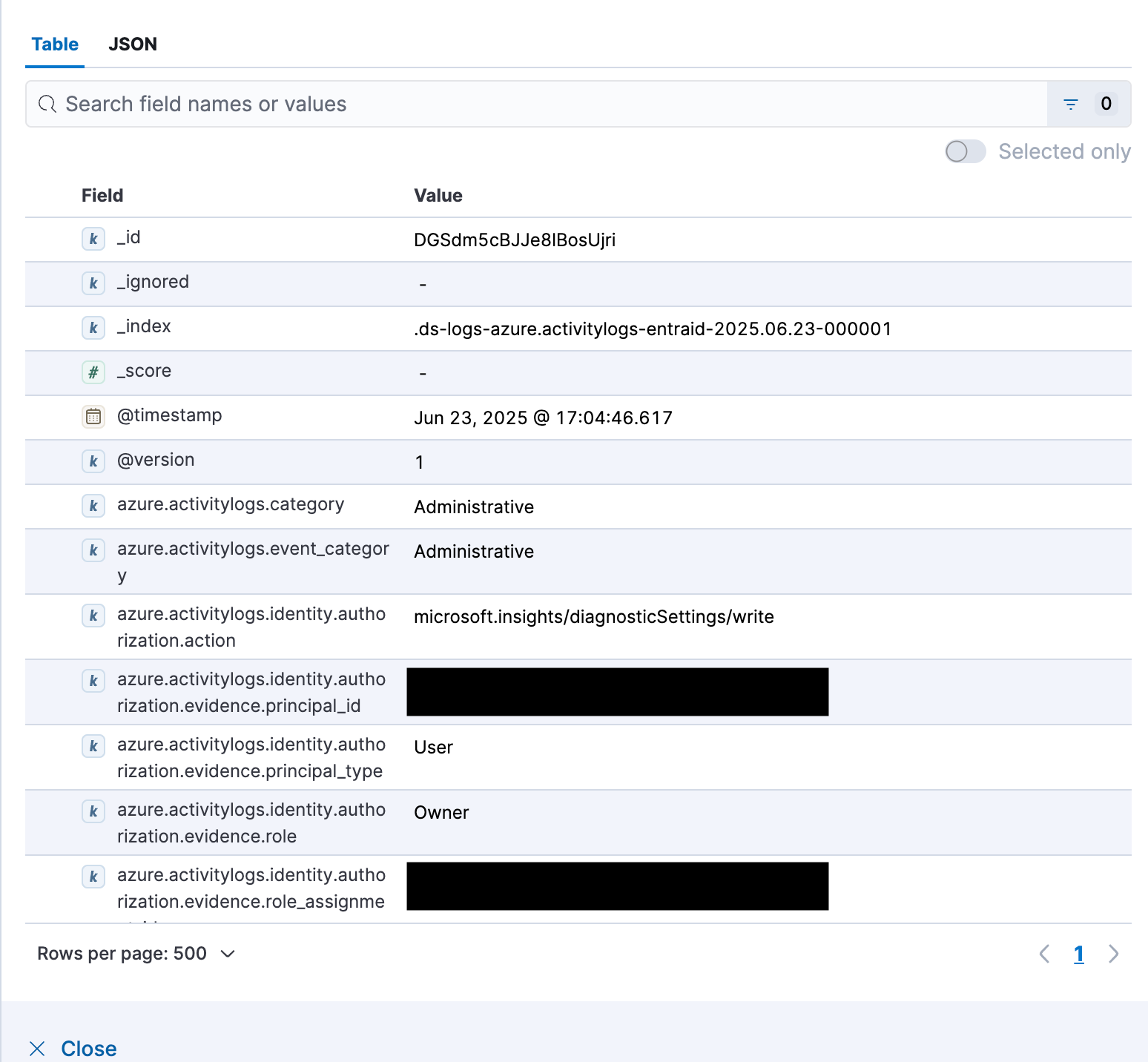

}

}Data is written into the logs-azure.activitylogs-entraid data stream as defined in the Logstash output configuration. This automatically activates the Azure activity logs ingest pipeline in Elasticsearch, processing the incoming data. The data is automatically ingested, and the out-of-the-box Azure Activity Dashboard lights up.
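The data stream name comes directly from the three data_stream_* settings in the output, joined as type, dataset, and namespace. As a quick illustration (the helper name is hypothetical), the naming can be expressed as:

```python
def data_stream_name(stream_type, dataset, namespace):
    """Compose a data stream name from the three Logstash output settings."""
    return f"{stream_type}-{dataset}-{namespace}"


print(data_stream_name("logs", "azure.activitylogs", "entraid"))
```

Changing the namespace alters only the last segment of the name. The type and dataset segments should stay as shown so that the data continues to match the Azure integration's assets.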

The path to a secure, robust data pipeline

By successfully integrating Entra ID for authentication with Logstash, you’ve taken a significant step toward a more secure and robust data pipeline. Moving away from static credentials to a modern, token-based OAuth 2.0 workflow not only minimizes your attack surface but also centralizes identity management, bringing your data ingestion process in line with today's security best practices.

Now is the time to translate this success into a broader security initiative. We encourage you to review all your data shippers and integration points. Are they using the most secure authentication methods available? A full audit of your security posture ensures that the principle of least privilege and centralized control are applied consistently across your entire observability and security infrastructure.

With your data ingestion pipeline fortified, you can more confidently focus on the next stage of your data journey: unlocking its full value. Securing the "front door" with Entra ID ensures the integrity of the data flowing into Elasticsearch. This foundation enables you to further enhance data accessibility for your teams and optimize storage strategies, potentially by using solutions like Elasticsearch logsdb index mode for cost-effective, long-term retention and analysis.

Take the first step today: Identify one critical data pipeline using a legacy connection method and use this guide to migrate it. Fortify your security, one pipeline at a time.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.