Tetragon migrates to Elastic Cloud Serverless for enhanced performance

Tetragon Financial Group is a multi-strategy hedge fund managing around $30 billion and traded on Euronext. As staff engineer at Tetragon, I was tasked with evaluating solutions to scale embeddings and implement a vector database to develop AI applications to support our future investment strategy. We recently migrated to Elastic Cloud Serverless and wanted to highlight the challenges, decisions, alternatives, and benefits that have shaped our experience for anyone else who may be considering a similar journey.

The need for a vector database to store embeddings for internal documents

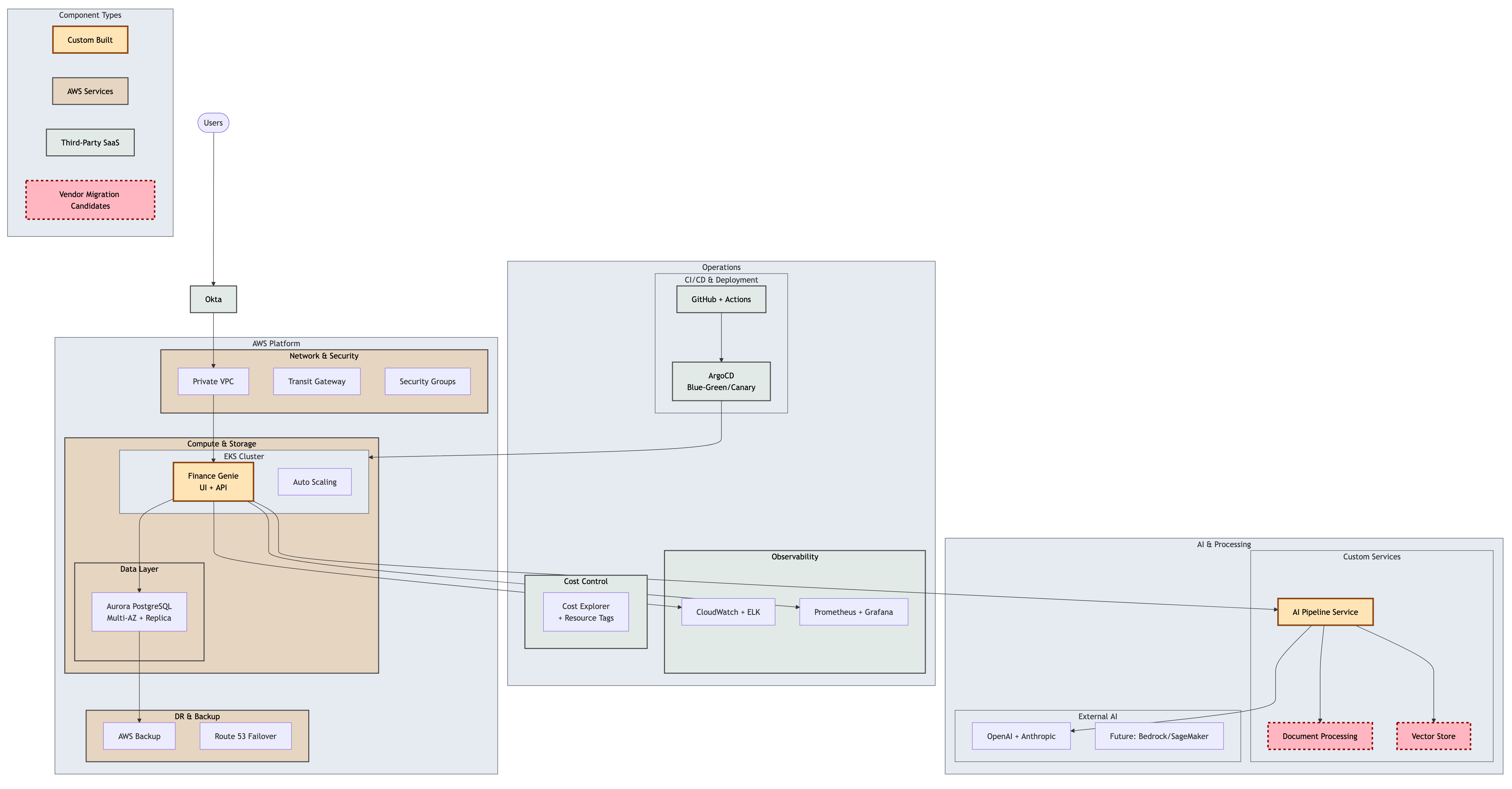

Our journey into AI development began in 2023, driven by the need to integrate AI capabilities into Tetragon’s operations. Internally, our technology team supports multiple funds and verticals. Our goal is to enable faster research and decision-making by centralizing broker notes, financial information, and research studies, all of which are critical for investment decisions. With a focus on in-house development, our team sought scalable, efficient, and performant storage, particularly a vector database. Elastic, already a trusted partner for our observability applications, emerged as a natural fit for our AI applications.

Data architecture and migration insights

Our current dataset is about 300GB across 3,000–4,000 documents, a figure that includes replication and metadata such as images and charts. We expect this to grow 3x–4x in the coming months as we automate more ingestion processes. In addition to unstructured documents, we’re also embedding structured data from relational databases, aiming to make BI-like queries accessible through our AI platform.

Limitations with ChromaDB and Pinecone

Initially, we used ChromaDB for our vector database needs. However, the lack of a managed solution posed significant challenges for our lean team. We evaluated Pinecone (the only other AWS-native offering at the time) and pgvector with PostgreSQL, but both presented scaling or operational overhead issues. For example, PostgreSQL struggled with anticipated document volumes (upwards of 1 million for some teams), and Pinecone would have required additional DevOps investment.

The move to Elastic Cloud Serverless

The turning point came during a Confluent Kafka conference, where Elastic presented its serverless solution. Seeing its potential, we connected with our Elastic account team for further discussion and soon concluded that it was a viable option to solve our challenge. For us, the decision to develop on Elastic Cloud Serverless was driven by several factors, including:

Managed solution: With a small DevOps team of only seven developers, we wanted to minimize infrastructure overhead, so a managed solution was critical. Not having to manage clusters or worry about upgrades freed up valuable bandwidth.

Scalability: The sporadic nature of our workloads made serverless an ideal choice, allowing us to scale efficiently without overcommitting resources. We appreciated the ability to scale up or down as usage evolved without having to pre-commit to a fixed cluster size.

Performance: Transitioning from ChromaDB to Elastic Cloud Serverless resulted in a 200% improvement in performance with query and retrieval times significantly reduced. This was partly due to the move from a self-hosted, resource-constrained infrastructure to Elastic’s optimized managed environment.

Easy migration process: The migration from ChromaDB to Elastic Cloud Serverless was remarkably swift, taking just two weeks. This rapid transition was facilitated by Elastic's comprehensive documentation and support from their team. Using frameworks like LlamaIndex, which supports Elastic as an abstraction layer, further streamlined the process.

Familiarity and integration: We were already using Elastic for logging and infrastructure monitoring, so expanding to serverless for vector search was a natural extension. Our team was comfortable with the Kibana UI and Elastic’s ecosystem.

Expansive coverage: As a global company with AWS as our preferred cloud provider, having geographic coverage is critical. Elastic Cloud Serverless gives us all the regions we need. As our data centers are primarily located in London and New York, it gave us the flexibility of localized data stores without worrying about the infra setup.
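To make the migration step more concrete, here is a minimal sketch of how records exported from ChromaDB could be reshaped into Elasticsearch bulk-index actions. It is an illustration only and not the pipeline described in this post, which relied on LlamaIndex as the abstraction layer. The function name `chroma_to_bulk_actions`, the index name, and the field names `content`, `embedding`, and `metadata` are assumptions chosen for the example. The input shape mirrors the dictionary returned by a ChromaDB `collection.get()` call that includes embeddings, documents, and metadatas.

```python
from typing import Any, Dict, Iterator, List


def chroma_to_bulk_actions(
    chroma_result: Dict[str, List[Any]],
    index_name: str,
) -> Iterator[Dict[str, Any]]:
    """Yield one bulk-index action per record in a ChromaDB get() result.

    Field names in `_source` are hypothetical; adapt them to your mapping.
    """
    ids = chroma_result["ids"]
    embeddings = chroma_result["embeddings"]
    documents = chroma_result["documents"]
    # Metadata can be missing entirely or be None for individual records.
    metadatas = chroma_result.get("metadatas") or [None] * len(ids)

    for doc_id, vector, text, metadata in zip(ids, embeddings, documents, metadatas):
        yield {
            "_index": index_name,
            "_id": doc_id,
            "_source": {
                "content": text,
                # Convert to plain floats so the action is JSON-serializable
                # even if the source vectors are NumPy values.
                "embedding": [float(x) for x in vector],
                "metadata": metadata or {},
            },
        }


if __name__ == "__main__":
    sample = {
        "ids": ["note-1"],
        "embeddings": [[0.1, 0.2, 0.3]],
        "documents": ["Example broker note text."],
        "metadatas": [{"source": "broker"}],
    }
    for action in chroma_to_bulk_actions(sample, "research-docs"):
        print(action)
```

Actions in this shape can be passed to the `elasticsearch.helpers.bulk` helper from the official Python client, assuming the target index already has a matching `dense_vector` mapping for the embedding field.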

One technical challenge we encountered was around indexing strategy. Initially, we created one index per document to preserve precision when using metadata-driven lookups such as temporal queries. This was primarily driven by the need to overcome limitations of vector detection strategies and completeness. However, this led to a high number of small indices, and we’ve worked closely with Elastic to optimize this, balancing our unique requirements with platform best practices. We have since refined our data strategy to better suit the platform and are now moving to hybrid selection and larger large language model (LLM) context windows.
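One common alternative to many small indices is a single shared index in which metadata filters narrow the search. The sketch below shows what such a request body could look like, combining a lexical `match` query with a filtered `knn` clause, which is one possible reading of "hybrid selection". It is a hedged illustration rather than our production query: the helper name `build_hybrid_search` and the field names `content`, `embedding`, and `metadata.published` are assumptions made for the example.

```python
from typing import Any, Dict, List, Optional


def build_hybrid_search(
    query_text: str,
    query_vector: List[float],
    published_after: Optional[str] = None,
    published_before: Optional[str] = None,
    k: int = 10,
    num_candidates: int = 100,
) -> Dict[str, Any]:
    """Build a search body that pairs a lexical query with filtered kNN.

    All field names are hypothetical and must match your own mapping.
    """
    # Temporal restriction expressed as a metadata range filter instead of
    # being encoded in the choice of index.
    date_range: Dict[str, str] = {}
    if published_after is not None:
        date_range["gte"] = published_after
    if published_before is not None:
        date_range["lte"] = published_before
    filters: List[Dict[str, Any]] = []
    if date_range:
        filters.append({"range": {"metadata.published": date_range}})

    bool_query: Dict[str, Any] = {"must": [{"match": {"content": query_text}}]}
    knn: Dict[str, Any] = {
        "field": "embedding",
        "query_vector": query_vector,
        "k": k,
        "num_candidates": num_candidates,
    }
    # Apply the same filter to both clauses so lexical and vector hits
    # are drawn from the same subset of documents.
    if filters:
        bool_query["filter"] = filters
        knn["filter"] = filters

    return {"query": {"bool": bool_query}, "knn": knn, "size": k}


if __name__ == "__main__":
    body = build_hybrid_search(
        "interest rate outlook",
        [0.1, 0.2, 0.3],
        published_after="2024-01-01",
    )
    print(body)
```

With the official Python client, a body like this could be sent as `client.search(index="research-docs", **body)`, where the index name is again a placeholder.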

Results and benefits

Since migrating to Elastic Cloud Serverless, our team has reaped numerous benefits, including:

Improved performance: We observed a substantial boost in performance with queries and data retrievals being 200% faster than before. This was driven by moving to managed infrastructure but also by the ability of the cluster to autoscale.

Efficient scaling: The autoscaling capabilities of Elastic Cloud Serverless have been a game changer, allowing us to scale effortlessly as our internal usage grows.

Reduced management overhead: The managed nature of the serverless solution has freed up resources, enabling the team to focus on core development tasks.

Smooth onboarding and documentation: The quality of Elastic’s documentation and the ability to quickly spin up a proof of concept (POC) were key to our rapid migration. Compared to competitors, Elastic’s onboarding experience stood out.

Lessons learned and advice

For teams considering a similar migration:

Elastic Cloud Serverless is especially well-suited for teams working with simple vectors and looking for fast onboarding.

Familiarity with Elastic’s stack and documentation can greatly accelerate migration and reduce friction.

Be mindful of indexing strategies. Aligning with platform best practices can help optimize costs and performance.

The ability to quickly run a POC and see value is a strong indicator of a good fit.

Use embedding models available within Elastic as they greatly simplify applications.
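As a rough illustration of the last point, Elasticsearch offers a `semantic_text` field type that generates embeddings at ingest time through an inference endpoint, together with a `semantic` query that searches such a field. The sketch below only builds the mapping and query bodies. The helper names, the field name `content`, and the example endpoint ID are assumptions for illustration, and the post does not state which embedding setup is actually in use.

```python
from typing import Any, Dict, Optional


def semantic_text_mapping(
    field: str = "content",
    inference_id: Optional[str] = None,
) -> Dict[str, Any]:
    """Return index mappings with one `semantic_text` field.

    If `inference_id` is omitted, the platform's default inference
    endpoint for the field type is used.
    """
    field_def: Dict[str, Any] = {"type": "semantic_text"}
    if inference_id is not None:
        field_def["inference_id"] = inference_id
    return {"properties": {field: field_def}}


def semantic_query(field: str, text: str) -> Dict[str, Any]:
    """Return a `semantic` query against a `semantic_text` field."""
    return {"semantic": {"field": field, "query": text}}


if __name__ == "__main__":
    # "my-embedding-endpoint" is a hypothetical inference endpoint ID.
    print(semantic_text_mapping("content", "my-embedding-endpoint"))
    print(semantic_query("content", "credit outlook for European banks"))
```

The appeal of this design is that the application sends plain text for both indexing and search, so no separate embedding service has to be called or kept in sync with the index.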

Looking ahead

As Tetragon continues to expand its AI capabilities, Elastic Cloud Serverless remains a cornerstone of our infrastructure. Our team plans to use more of Elastic's features, including their machine learning embeddings provider. We are excited about how this product is developing and appreciate the Elastic team’s help in keeping us updated and in deploying these features.

Visit Elastic Cloud Serverless for more insights and updates or sign up for a free trial.
