
When working with RAG systems, Elasticsearch offers a significant advantage: it combines hybrid search (vector search plus traditional text search) with hard filters, which helps ensure that the retrieved data is relevant to the user’s query. Grounding the model in relevant data makes it less prone to hallucination and generally improves the quality of the system’s answers. In this blog, we will explore how to take a multimodal RAG system to the next level using Elastic’s geospatial search features.

Getting started

You can find the full source code used in this blog here.

Prerequisites

- Elasticsearch 8.14+ (the search pipeline uses the RRF retriever, which earlier versions do not support)

- Ollama

- cogito:3b model

- Python 3.8+

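- Python dependencies:
  - elasticsearch
  - elasticsearch-dsl
  - ollama
  - clip_processor
  - torch
  - transformers
  - PIL (installed from PyPI as Pillow)
  - streamlit
  - json, os, and typing (part of the Python standard library, so no installation is needed)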

Setup

1. Clone the repository:

2. Install required libraries:

3. Install and set up Ollama:

4. Configure Elasticsearch

- Make sure the following environment variables are set:
  - ES_INDEX
  - ES_HOST
  - ES_API_KEY

- Set the index mapping in Elasticsearch, paying special attention to the geolocation and embedding field definitions:
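As a rough sketch of this configuration step, the snippet below does two things. It reads the three environment variables, and it builds an index mapping with one geo_point field and two 512-dimensional dense_vector fields.

Several details are assumptions. The field names location, image_embedding, description_embedding, title, and park are hypothetical; only generated_description is named in this post. The cosine similarity setting is also an assumption. Check the repository's own mapping before reusing this.

```python
import os
from typing import Dict

# Hypothetical field names: only generated_description is named in the post.
EMBEDDING_DIMS = 512  # openai/clip-vit-base-patch32 returns 512-dimensional embeddings
REQUIRED_ENV_VARS = ("ES_INDEX", "ES_HOST", "ES_API_KEY")


def read_es_settings() -> Dict[str, str]:
    """Return the connection settings, failing early if any variable is unset or empty."""
    missing = [name for name in REQUIRED_ENV_VARS if not os.environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: os.environ[name] for name in REQUIRED_ENV_VARS}


def vector_field(dims: int = EMBEDDING_DIMS) -> dict:
    """A dense_vector field indexed for approximate kNN search (similarity is an assumption)."""
    return {"type": "dense_vector", "dims": dims, "index": True, "similarity": "cosine"}


def build_index_mapping(dims: int = EMBEDDING_DIMS) -> dict:
    """Mapping with text fields, a geo_point, and one vector per modality."""
    return {
        "properties": {
            "title": {"type": "text"},
            "generated_description": {"type": "text"},
            "park": {"type": "keyword"},
            # geo_distance and the other geo queries filter on this geo_point field
            "location": {"type": "geo_point"},
            # Both vectors live in CLIP's shared image-text embedding space
            "image_embedding": vector_field(dims),
            "description_embedding": vector_field(dims),
        }
    }
```

With the official Python client, the mapping could then be applied with something like Elasticsearch(settings["ES_HOST"], api_key=settings["ES_API_KEY"]).indices.create(index=settings["ES_INDEX"], mappings=build_index_mapping()).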

Run the application

1. Generate and index the images’ embeddings and metadata:

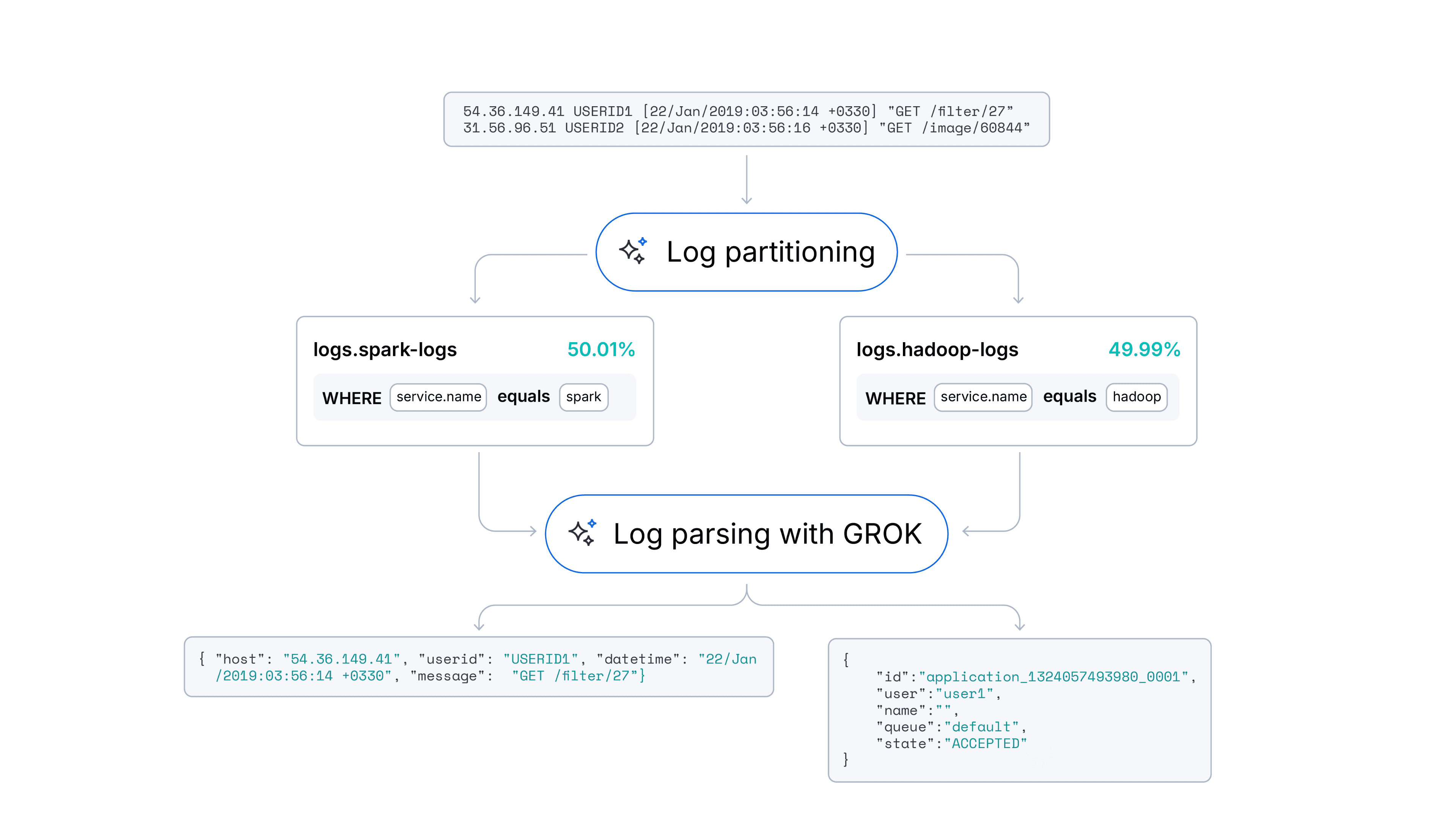

This file runs the data indexing pipeline. It processes the image metadata files and enriches them with multimodal embeddings (from the description and the image itself using the CLIP model). Finally, it uploads the documents to Elasticsearch. After executing this command, you should see the mmrag_blog index in Elasticsearch with the image metadata, geolocation, and image and text embeddings.

2. Run the Streamlit app with the following command, then use the UI in your browser:

After executing this command, you can see the project webpage at http://localhost:8501.

The webpage is the interface for the RAG application. From there, you can ask a question. The assistant then extracts the appropriate parameters from your question and runs a search on Elasticsearch that combines several retrievers with Reciprocal Rank Fusion (RRF) to find related pictures. Finally, it formulates a response and shows some pictures from the results.

Implementation overview

To demonstrate Elastic’s RAG capabilities, we will build an assistant that can answer questions about national parks using relevant data. The search combines 4 approaches with data inferred from the user’s text query:

- Image vector search

- Text vector search

- Lexical text search

- Geospatial filtering

This allows our assistant to answer questions that are relevant and focused on what the user needs.

Now, how can the geospatial filter improve the assistant’s results? Consider a user who asks, “Where can I find canyons near Salt Lake City?” Without a geospatial filter, the assistant might suggest:

- Canyonlands National Park - Utah

- Grand Canyon National Park - Arizona

However, since we know the user is specifically looking for sites near Salt Lake City, it makes sense to look for answers in Utah. Therefore, the correct option is Canyonlands National Park only.
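To make the idea concrete, here is a small client-side illustration of a distance filter using the haversine formula. Elasticsearch evaluates geo_distance on the server, so this is not how the project filters results. The coordinates are approximate, and the 400 km radius is an arbitrary value chosen for the example.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Approximate coordinates, for illustration only
SALT_LAKE_CITY = (40.7608, -111.8910)
PARKS = {
    "Canyonlands National Park": (38.20, -109.93),
    "Grand Canyon National Park": (36.06, -112.14),
}


def parks_within(origin, radius_km, parks=PARKS):
    """Keep only the parks whose point lies within radius_km of origin (a hard filter, no scoring)."""
    return [
        name
        for name, (lat, lon) in parks.items()
        if haversine_km(origin[0], origin[1], lat, lon) <= radius_km
    ]
```

Here, parks_within(SALT_LAKE_CITY, 400) keeps only Canyonlands (roughly 330 km away) and drops Grand Canyon (roughly 520 km away).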

The implementation in this blog uses a geo_distance query to find results (pictures whose geopoint lies) within a given distance of a particular national park. We also use geoshapes to draw the parks’ areas.
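Here is a minimal sketch of such a filter as a Python dict. It assumes the pictures' geopoints are stored in a geo_point field named location, which is a hypothetical name. Placing geo_distance in the filter clause of a bool query restricts the candidates without affecting relevance scores.

```python
def geo_distance_filter(lat: float, lon: float, distance_km: float, field: str = "location") -> dict:
    """geo_distance clause keeping documents within distance_km of (lat, lon); field name is hypothetical."""
    return {
        "geo_distance": {
            # Distances are strings with a unit suffix, e.g. "50km"
            "distance": f"{distance_km:g}km",
            field: {"lat": lat, "lon": lon},
        }
    }


def filtered_search_body(text: str, lat: float, lon: float, distance_km: float) -> dict:
    """Plain bool query: the match clause scores, the geo_distance filter only includes or excludes."""
    return {
        "query": {
            "bool": {
                "must": [{"match": {"generated_description": text}}],
                "filter": [geo_distance_filter(lat, lon, distance_km)],
            }
        }
    }
```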

However, Elastic’s geo query capabilities go well beyond that:

- geo_bounding_box query: Finds documents (geopoints or geoshapes) that intersect a specified rectangle

- geo_grid query: Finds documents that intersect a specified geohash, map tile, or H3 bin

- geo_shape query: Finds documents whose geometry is related to the specified geoshape through one of the spatial relations intersects, disjoint, within, or contains
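The following sketch shows the request shapes of these three queries as Python dicts. The location field and all coordinate values are hypothetical. The relation names are the ones Elasticsearch accepts for geo_shape queries.

```python
from typing import List, Sequence, Tuple

GEO_SHAPE_RELATIONS = {"intersects", "disjoint", "within", "contains"}


def geo_bounding_box_query(field: str, top: float, left: float, bottom: float, right: float) -> dict:
    """Match documents whose geometry intersects the rectangle."""
    return {
        "geo_bounding_box": {
            field: {
                "top_left": {"lat": top, "lon": left},
                "bottom_right": {"lat": bottom, "lon": right},
            }
        }
    }


def geo_grid_query(field: str, geohash: str) -> dict:
    """Match documents intersecting one geohash cell (geotile and geohex are the other cell types)."""
    return {"geo_grid": {field: {"geohash": geohash}}}


def geo_shape_query(field: str, ring: Sequence[Tuple[float, float]], relation: str = "intersects") -> dict:
    """Match documents related to a polygon given as (lon, lat) pairs; the ring is closed if needed."""
    if relation not in GEO_SHAPE_RELATIONS:
        raise ValueError("relation must be one of " + ", ".join(sorted(GEO_SHAPE_RELATIONS)))
    if len(ring) < 3:
        raise ValueError("a polygon needs at least three points")
    points: List[List[float]] = [[lon, lat] for lon, lat in ring]
    if points[0] != points[-1]:
        points.append(list(points[0]))  # GeoJSON rings must end where they start
    return {
        "geo_shape": {
            field: {
                "shape": {"type": "polygon", "coordinates": [points]},
                "relation": relation,
            }
        }
    }
```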

Dataset

We will use geotagged pictures from national parks obtained from Flickr. We augment these pictures with a description and vectorize both the image and description using the openai/clip-vit-base-patch32 model:

We merge these embeddings with the images’ metadata, and at the end, we get a document that looks like this:
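One way to express that merge step is sketched below. The metadata keys latitude and longitude and the output field names are assumptions, not the repository's actual schema. Converting each vector element with float() also makes numpy float32 values JSON-serializable; json.dumps would otherwise reject them.

```python
import json
from typing import Sequence

EMBEDDING_DIMS = 512


def build_document(metadata: dict, image_embedding: Sequence[float],
                   description_embedding: Sequence[float], dims: int = EMBEDDING_DIMS) -> dict:
    """Merge one image's metadata with its two CLIP embeddings (hypothetical field names)."""
    for name, vec in (("image_embedding", image_embedding), ("description_embedding", description_embedding)):
        if len(vec) != dims:
            raise ValueError(f"{name} has {len(vec)} dimensions, expected {dims}")
    doc = dict(metadata)  # shallow copy: the caller's metadata dict is left untouched
    # A geo_point field accepts an object with lat and lon keys
    doc["location"] = {"lat": float(metadata["latitude"]), "lon": float(metadata["longitude"])}
    # float() turns numpy scalars into plain Python floats that json can serialize
    doc["image_embedding"] = [float(x) for x in image_embedding]
    doc["description_embedding"] = [float(x) for x in description_embedding]
    return doc


def to_json_line(doc: dict) -> str:
    """Serialize one document, e.g. before writing it to the JSON file that gets indexed."""
    return json.dumps(doc, ensure_ascii=False)
```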

A key benefit of using Elastic with geo positions is the Kibana Maps visualization. In Kibana, our dataset looks like this (note that we also added geo shapes for the national parks):

Zooming in, we can see the same document as before in Yellowstone. Additionally, Yellowstone Park’s (approximate) shape is also drawn in the layer below.
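For the park layer, each park's outline can be indexed as a GeoJSON polygon in a field mapped as geo_shape. The sketch below is an assumption about how such a document could be built. The park and shape field names are hypothetical, and the test rectangle is only a crude box around Yellowstone. The code closes the ring and orients it counterclockwise, which is Elasticsearch's default orientation for polygon outer rings.

```python
from typing import List, Sequence, Tuple


def signed_area(ring: Sequence[Sequence[float]]) -> float:
    """Shoelace formula on (lon, lat) pairs: positive for counterclockwise rings."""
    area = 0.0
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        area += x1 * y2 - x2 * y1
    return area / 2.0


def park_shape_document(park: str, outline: Sequence[Tuple[float, float]]) -> dict:
    """Document with a park's approximate outline as a closed, counterclockwise polygon (hypothetical fields)."""
    points: List[List[float]] = [[float(lon), float(lat)] for lon, lat in outline]
    if len(points) > 1 and points[0] == points[-1]:
        points = points[:-1]  # work on the open ring, close it again at the end
    if len(points) < 3:
        raise ValueError("a polygon needs at least three distinct points")
    if signed_area(points) < 0:
        points.reverse()  # clockwise input: flip it to counterclockwise
    points.append(list(points[0]))
    return {"park": park, "shape": {"type": "Polygon", "coordinates": [points]}}
```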

System architecture

Indexing pipeline

The indexing pipeline will handle the vectorization of both the image and description. It will also add more metadata to the image to create a document and index it to Elastic:

1. The starting point is pairs of images and metadata from national parks. This metadata includes the geolocation, title of the image, and a description.

2. We feed the image and description to the CLIP model (openai/clip-vit-base-patch32) to obtain an embedding of each in the same 512-dimensional vector space. You can see the complete source code of this step here.

The process to generate an embedding from our image is:

- Loads the image in RGB format using Image.open()

- Processes the image by converting it into tensors, the format the model expects, using processor()

- Extracts a 512-dimensional dense vector representation of the image using model.get_image_features()

- Finally, converts the PyTorch tensor into a flattened numpy array using outputs.numpy().flatten()

The process to generate an embedding from text is:

- Processes the input text, tokenizing it and converting it to tensors, using processor()

- Passes the tokenized text through the model to extract its semantic features using model.get_text_features(). The resulting embedding also has 512 dimensions.

- Normalizes the embedding to unit length using text_features / text_features.norm(), so that the dot product between two normalized embeddings equals their cosine similarity

- Finally, converts the embedding into a flattened numpy array using text_features.numpy().flatten()

We chose this model because it is a multimodal model trained to maximize the similarity between matching images and texts. This way, an image and its description tend to produce embeddings that are close in the vector space.
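The normalization step is what lets a dot product stand in for cosine similarity. Here is a small pure-Python sketch of that arithmetic; no CLIP is involved, and the real code works on PyTorch tensors. On the Elasticsearch side, the dot_product vector similarity expects unit-length vectors, while cosine does not.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (the same idea as text_features / text_features.norm())."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity computed as the dot product of the normalized vectors."""
    return dot(l2_normalize(a), l2_normalize(b))
```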

3. We merge all the metadata (including the description and geoposition) with the image and description embeddings into a JSON file.

We index the JSON file to Elastic using:

Where doc is the metadata for each image.
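The indexing call itself is not reproduced here. The sketch below is a swapped-in alternative, not necessarily what the repository does. It turns the documents from the JSON file into actions for the Python client's elasticsearch.helpers.bulk helper, which sends them in batches instead of one request per image. The file layout (a JSON array of documents) and the image_id field are assumptions.

```python
import json
from typing import Iterable, Iterator, List, Optional


def load_documents(path: str) -> List[dict]:
    """Read the merged documents, assuming the file holds a JSON array (or a single object)."""
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    return data if isinstance(data, list) else [data]


def bulk_actions(index: str, docs: Iterable[dict], id_field: Optional[str] = None) -> Iterator[dict]:
    """Yield one index action per document, in the dict format helpers.bulk accepts."""
    for doc in docs:
        action = {"_index": index, "_source": doc}
        if id_field is not None:
            # A stable _id (hypothetical field) makes re-runs overwrite documents instead of duplicating them
            action["_id"] = doc[id_field]
        yield action
```

With a client named es, this could be consumed as helpers.bulk(es, bulk_actions(index, load_documents(path), id_field="image_id")).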

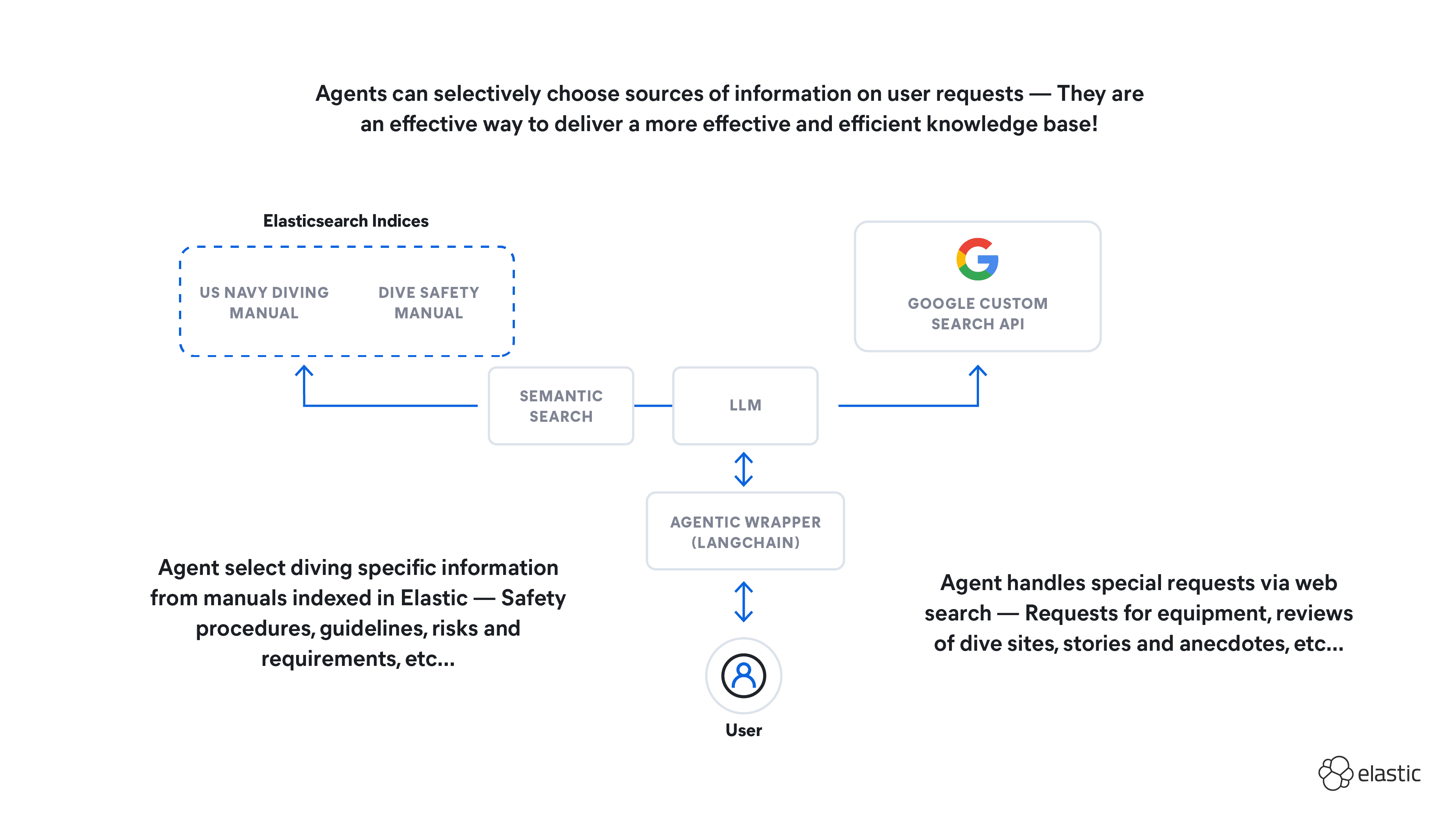

Search pipeline

This stage handles the user’s query, builds the search, and generates a response from the search results. The LLM used is cogito:3b running on Ollama, though it could easily be replaced by a remote model such as Claude or ChatGPT. We chose this particular model because it is lightweight and, compared to similar models such as Llama 3.2 3B, performs well on general-purpose tasks, which is what an assistant needs. This means we get good results without long waits, and everything runs locally!

The pipeline works like this:

1. We receive an input from the user: “Where can I see mountains in Washington State?”

2. We feed the user input and a dictionary of the parks, including their states and geolocations (defined in the same Python file), to the LLM with instructions to extract parameters for the Elastic query. The exact prompt is:

And this is an example of data in the parks_info dictionary:

The model extracts the following data from the prompt above:
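The prompt and the model output are not reproduced above, so the following is only a sketch of how such output could be checked before it reaches Elasticsearch. It assumes the model is asked to reply with a JSON object containing context_search, distance_km, and a park name under a hypothetical park key; the prompt wording is also hypothetical. Small models sometimes wrap JSON in extra prose, so the sketch extracts the outermost {...} span before parsing.

```python
import json
import re
from typing import Dict

# Expected keys and types; "park" is a hypothetical key name
EXPECTED_TYPES = {"context_search": str, "park": str, "distance_km": (int, float)}


def build_extraction_prompt(question: str, parks_info: Dict[str, dict]) -> str:
    """Hypothetical prompt wording: give the model the parks and ask for strict JSON."""
    return (
        "Extract search parameters from the question. Reply only with a JSON object "
        'with the keys "context_search", "park", and "distance_km".\n'
        f"Parks: {json.dumps(parks_info)}\n"
        f"Question: {question}"
    )


def parse_llm_parameters(raw: str) -> dict:
    """Pull the JSON object out of the model's reply and validate its fields."""
    match = re.search(r"\{.*\}", raw, re.DOTALL)  # outermost {...} span, ignoring surrounding prose
    if match is None:
        raise ValueError("no JSON object found in the model output")
    params = json.loads(match.group(0))
    for key, expected in EXPECTED_TYPES.items():
        if not isinstance(params.get(key), expected):
            raise ValueError(f"missing or invalid parameter: {key}")
    params["distance_km"] = float(params["distance_km"])
    if params["distance_km"] <= 0:
        raise ValueError("distance_km must be positive")
    return params
```

With the ollama Python library, passing format="json" to ollama.chat asks the model for JSON output. This makes parsing failures less likely, but they are still worth guarding against.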

3. We use the context_search parameter to generate a new embedding using the same CLIP model.

4. We extract the coordinates from the parks_info dictionary.
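Step 4 is a dictionary lookup, but the park name comes from an LLM and may not match the dictionary key exactly. The sketch below tolerates differences in case and surrounding whitespace. The shape of the entries (state, lat, lon) and the approximate coordinates are assumptions about parks_info. The CLIP embedding from step 3 is not reproduced here.

```python
from typing import Dict, Tuple

# Hypothetical shape of parks_info entries; coordinates are approximate
PARKS_INFO: Dict[str, dict] = {
    "Mount Rainier National Park": {"state": "Washington", "lat": 46.88, "lon": -121.73},
    "Crater Lake National Park": {"state": "Oregon", "lat": 42.94, "lon": -122.10},
}


def park_coordinates(park_name: str, parks_info: Dict[str, dict] = PARKS_INFO) -> Tuple[float, float]:
    """Resolve the park name returned by the LLM to (lat, lon), ignoring case and outer whitespace."""
    normalized = {name.casefold(): info for name, info in parks_info.items()}
    info = normalized.get(park_name.strip().casefold())
    if info is None:
        raise KeyError(f"unknown park: {park_name!r}")
    return info["lat"], info["lon"]
```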

5. We use all these parameters to create an Elasticsearch query. This is the heart of the RAG feature:

- We create a geo_distance filter using the coordinates and the 'distance_km' parameter.

- We create a match text query against the ‘generated_description’ field.

- We create a standard retriever that uses the text query from the previous step and the geo_distance filter.

- We create two knn retrievers that take the embedding created in step 3 and match it against the image embedding and the text embedding indexed in each document. Each retriever also uses the geo_distance filter.

- We use an RRF retriever to merge the ranked results of the other three retrievers into a single list.
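To see what the RRF retriever does with the three result lists, here is a plain-Python sketch of Reciprocal Rank Fusion. Each document's score is the sum of 1 / (rank_constant + rank) over the lists it appears in, with ranks starting at 1. The default rank_constant in Elasticsearch is 60. The document IDs are made up, and Elasticsearch performs this fusion server-side.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple


def reciprocal_rank_fusion(result_lists: Sequence[Sequence[str]], rank_constant: int = 60,
                           top_n: Optional[int] = None) -> List[Tuple[str, float]]:
    """Fuse ranked ID lists: score(d) = sum over lists of 1 / (rank_constant + rank of d)."""
    scores: Dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (rank_constant + rank)
    # Highest score first; ties broken by ID so the output is deterministic
    fused = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return fused if top_n is None else fused[:top_n]
```

For example, fusing ["a", "b", "c"], ["b", "a", "d"], and ["b", "c", "a"] ranks the IDs as b, a, c, d. Because only ranks matter, BM25 scores and vector similarities, which live on different scales, never need to be calibrated against each other.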

This whole process is executed in the rrf_search() function:

At the end, we obtain a query like this:
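The actual query is not reproduced here. The sketch below shows the general shape such a request body could take, following the steps listed above:

- one standard retriever with a match query on generated_description
- two knn retrievers
- the same geo_distance filter on all three
- an rrf retriever combining them

The vector field names, the location field, and the k and num_candidates values are assumptions.

```python
from typing import Sequence


def build_rrf_search_body(text_query: str, query_vector: Sequence[float], lat: float, lon: float,
                          distance_km: float, k: int = 10, num_candidates: int = 100) -> dict:
    """Request body combining lexical and two vector retrievers with RRF (hypothetical field names)."""
    if num_candidates < k:
        raise ValueError("num_candidates must be at least k")
    # The same geo filter restricts every retriever to the chosen park's surroundings
    geo_filter = {"geo_distance": {"distance": f"{distance_km:g}km", "location": {"lat": lat, "lon": lon}}}
    vector = [float(x) for x in query_vector]

    def knn(field: str) -> dict:
        return {"knn": {"field": field, "query_vector": vector, "k": k,
                        "num_candidates": num_candidates, "filter": geo_filter}}

    lexical = {"standard": {"query": {"bool": {
        "must": [{"match": {"generated_description": text_query}}],
        "filter": [geo_filter],
    }}}}
    return {
        "retriever": {"rrf": {"retrievers": [lexical, knn("image_embedding"), knn("description_embedding")]}},
        "size": k,
    }
```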

6. Afterwards, we feed the documents obtained from Elastic and the user’s original query to the LLM with this prompt:
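The exact prompt is not reproduced above, so the template in this sketch is hypothetical. It shows one way to turn the Elasticsearch hits into numbered context for the LLM. It leaves out the embedding arrays stored in _source, which would only waste the small model's context window. The title and park fields are assumed names; generated_description is the field used by the lexical search.

```python
from typing import Iterable, List, Mapping


def build_answer_prompt(question: str, hits: Iterable[Mapping], max_hits: int = 5) -> str:
    """Format the top hits as numbered context; only text fields are copied, never the vectors."""
    lines: List[str] = []
    for i, hit in enumerate(list(hits)[:max_hits], start=1):
        src = hit.get("_source", {})
        lines.append(
            f"[{i}] {src.get('title', 'Untitled')} ({src.get('park', 'unknown park')}): "
            f"{src.get('generated_description', '')}"
        )
    context = "\n".join(lines) if lines else "No matching documents were found."
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

The resulting string could then be sent with the ollama Python library, for example ollama.chat(model="cogito:3b", messages=[{"role": "user", "content": prompt}]), reading the answer from the response's message content.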

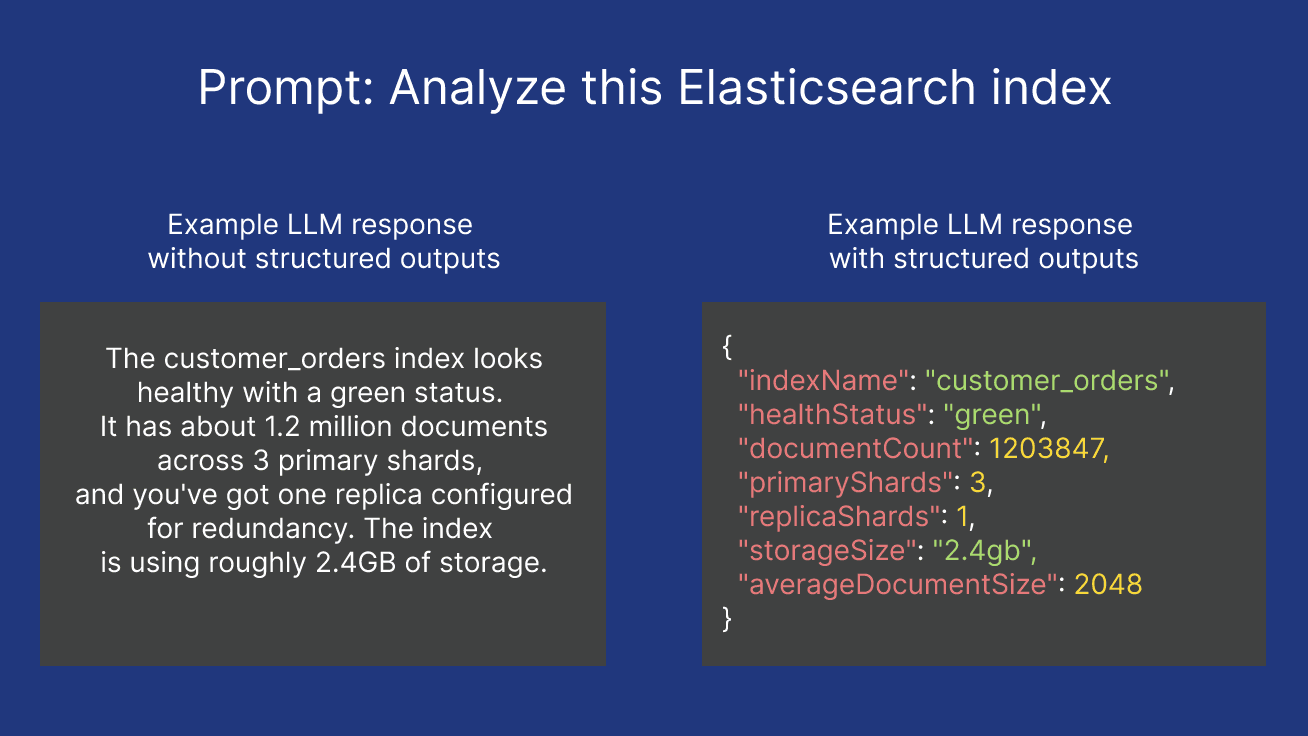

7. Finally, the LLM creates a response from the search results:

Web application

A front-end based on Streamlit handles the user input, runs the search pipeline to obtain the LLM final response, and displays images from the search results with their descriptions.

You can find the application source code and instructions here.

Multimodal RAG and geospatial search usage example

Query: Any places to ride a boat in Oregon?

Here, the LLM extracted these parameters:

The search was therefore centered on Crater Lake National Park, so the response comes only from this national park in Oregon. This way, the system respects the constraints in the question. It does not mention other parks where a boat ride is possible but that are not in Oregon.

Conclusion

In this article, we saw how integrating multimodal RAG capabilities with Elasticsearch's geospatial features improves the relevance and accuracy of search results in RAG systems. By combining image and text vector search with lexical search and precise geo-filtering, the system can provide highly contextualized answers. This approach helps reduce hallucinations. Elasticsearch's wider range of geo queries and Kibana's visualization tools also leave room to build an even more comprehensive, user-centric search experience.